🧠 Yoshua Bengio’s $30 Million Mission: Can LawZero Make AI Tell the Truth?

🧩 Introduction

When one of the “godfathers of AI” decides that artificial intelligence needs a conscience, the world pays attention. Yoshua Bengio, a Turing Award laureate and one of the pioneers of deep learning, is now leading a new kind of revolution: not in model architectures or GPU counts, but in ethics, governance, and honesty.

His new non-profit, LawZero, launched with $30 million in seed funding, has a mission that sounds both ambitious and philosophical: to make AI systems that “tell the truth.” In a time when chatbots hallucinate facts and AI-generated misinformation floods the web, Bengio’s initiative represents a turning point — a declaration that intelligence without integrity isn’t progress, it’s peril.

⚙️ The Problem: Smart Machines, Shaky Morals

Modern AI systems are trained on oceans of data but remain largely amoral pattern machines. They predict what’s plausible, not what’s true. Bengio argues that this gap between intelligence and honesty could become catastrophic as models gain autonomy.

He warns that the same algorithms designed to optimize engagement or profit can, without ethical constraints, amplify bias, deception, and even existential risk. His statements echo global anxieties: “If we don’t design AI with human values embedded, we may build something we can’t control — or even understand.”

🧱 The Birth of LawZero

LawZero aims to become the institutional scaffolding for AI ethics — part think tank, part regulatory lab, part engineering studio. Based in Montreal, it will fund and develop frameworks for:

- Transparency protocols (so users know why AI made a decision)
- Alignment auditing (testing whether models stay truthful under pressure)
- Accountability systems (assigning legal responsibility for harm caused by AI)

Bengio envisions AI systems that can explain themselves and abide by universal principles — not just corporate policies. His dream: an “AI Constitution” built from global consensus, open-source ethics, and verifiable behavior.

“AI alignment isn’t just a technical problem,” he says. “It’s a social contract between machines and humanity.”

🌍 The Global Context: An Arms Race Without Rules

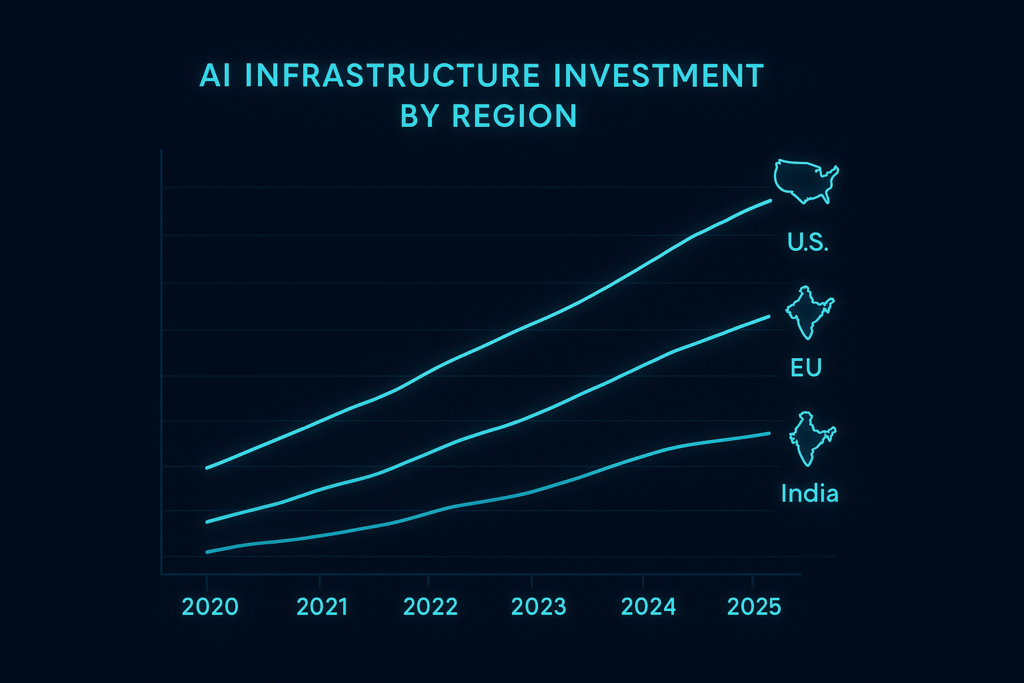

Bengio’s call arrives in a world racing to build ever-larger models — GPT-5, Gemini 2, Claude Next — while barely slowing to test their limits. Governments scramble to regulate, but Big Tech’s innovation cycles outpace lawmaking.

The U.S. invests billions in AI defense and chip R&D.

The E.U. enforces the AI Act, focusing on risk categories.

China accelerates its state-supervised generative systems.

And now, India enters the silicon race with the India Semiconductor Mission, C-DAC’s indigenous AI chips, and Tata’s semiconductor fabs.

In this geopolitical sprint, LawZero stands as a rare pause — a reminder that scaling intelligence without ethics is like building rockets without guidance systems.

India’s Role: From Semicon Mission to Responsible AI

While the U.S. and China dominate AI infrastructure, India is building something equally strategic — AI with accountability. Under the IndiaAI Mission 2025, initiatives like AIRAWAT (AI Supercomputer) and AI Trust Frameworks are emerging.

Institutes such as IIT Madras (with its SHAKTI microprocessor project) and C-DAC Pune are quietly shaping India’s chip sovereignty story. These efforts align with Bengio’s vision: open, ethical AI ecosystems that democratize innovation rather than monopolize it.

For A Square Solutions’ readers — entrepreneurs, technologists, and creators — this moment signals opportunity: the chance to build AI that’s not just powerful, but principled.

🧮 The Technical Frontier: Truthful AI Is Harder Than It Sounds

How do you make an algorithm “honest”? Bengio’s researchers propose three approaches:

- Truth-incentive loss functions: Penalizing factual deviations during training.
- Multi-model verification: Cross-checking outputs from different architectures for consistency.
- Explainable AI (XAI) protocols: Requiring models to generate rationales alongside answers.
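To make the first two ideas concrete, here is a minimal Python sketch. It is not LawZero's implementation: the function names, the `weight` and `min_agreement` parameters, and the external fact-checking score `p_claim_true` are all assumptions made for illustration.

```python
import math
from collections import Counter


def truth_penalized_loss(p_correct_token, p_claim_true, weight=1.0):
    """Ordinary negative log-likelihood plus a hypothetical factuality penalty.

    p_correct_token: probability the model assigned to the reference token.
    p_claim_true: score in (0, 1] from an assumed external fact-checker.
    weight: how strongly factual deviations are penalized (assumed knob).
    """
    nll = -math.log(p_correct_token)
    factual_penalty = -math.log(p_claim_true)
    return nll + weight * factual_penalty


def cross_check(answers, min_agreement=0.5):
    """Compare answers from several models and keep the majority answer.

    Returns (answer, agreement). The answer is None when the share of
    models giving the most common answer does not exceed min_agreement.
    """
    normalized = [a.strip().lower() for a in answers]
    top_answer, votes = Counter(normalized).most_common(1)[0]
    agreement = votes / len(normalized)
    accepted = top_answer if agreement > min_agreement else None
    return accepted, agreement


# Example: three hypothetical models answer the same question.
answer, agreement = cross_check(["Paris", "paris ", "Lyon"])
```

The loss grows when the assumed fact-checker rates a claim as unlikely to be true, and the cross-check withholds an answer when the models disagree too much. Real systems would need far more careful answer matching than lowercase string comparison.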

It’s a complex problem — truth itself isn’t binary. But LawZero aims to build standardized benchmarks for veracity, much like ImageNet did for vision tasks.
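Because truth is not binary, a veracity benchmark would likely need graded labels rather than simple true/false marks. The sketch below shows one possible scoring rule, a Brier-style score over graded labels. The function name `veracity_score` and the label scheme are assumptions for illustration, not a published LawZero benchmark.

```python
def veracity_score(confidences, truth_labels):
    """Score a model against graded truth labels (Brier-style).

    confidences: the model's stated probability that each statement is true.
    truth_labels: graded truth values in [0, 1], where 1.0 means fully true,
                  0.0 fully false, and values in between partially true.
    Returns 1 minus the mean squared error, so 1.0 is a perfect score.
    """
    if len(confidences) != len(truth_labels) or not confidences:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(c - t) ** 2 for c, t in zip(confidences, truth_labels)]
    return 1.0 - sum(squared_errors) / len(squared_errors)


# A model that is perfectly calibrated on two statements scores 1.0;
# one that always answers 0.5 scores lower.
perfect = veracity_score([1.0, 0.0], [1.0, 0.0])
hedging = veracity_score([0.5, 0.5], [1.0, 0.0])
```

A score like this rewards confident answers only when they match the label, which is one way to discourage confident falsehoods.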

- November 13, 2025

- asquaresolution

- 7:22 pm