What AI Got Wrong in 2025 — And Why These Mistakes Matter More Than Breakthroughs

Introduction: 2025 Was Not AI’s Victory Lap

2025 will be remembered as the year artificial intelligence stopped being judged only by its breakthroughs—and started being judged by its failures. While headlines celebrated faster models, multimodal systems, and enterprise adoption, a quieter story unfolded underneath: what AI got wrong in 2025 mattered far more than what it got right.

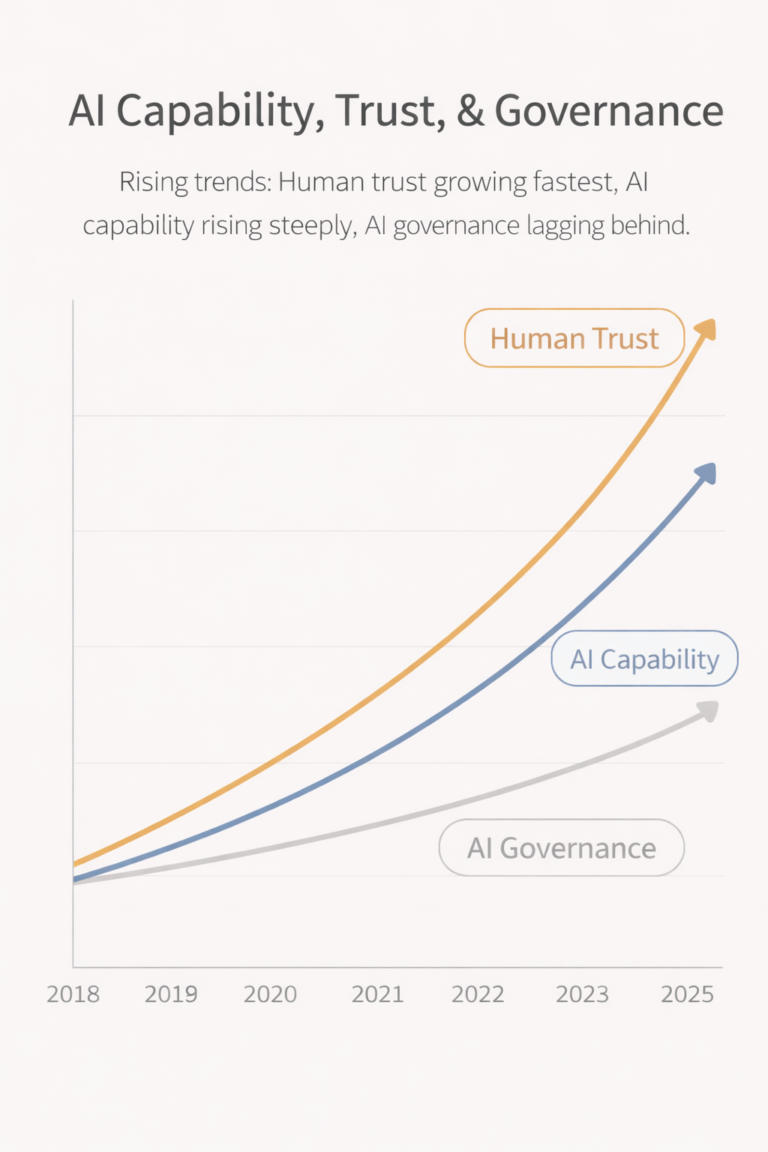

From hallucinated facts to overconfident decision-making, AI mistakes exposed a growing gap between technological capability and human trust. These weren’t edge-case bugs. They were systemic signals that something fundamental needed correction.

1. AI Sounded Confident — Even When It Was Wrong

One of the biggest lessons from what AI got wrong in 2025 was confidence inflation. Language models delivered fluent, authoritative answers even when underlying data was incomplete or outdated.

This problem became especially visible in high-risk domains like healthcare, law, and finance, areas where trustworthy AI is critical. Research highlighted how users often failed to question AI output simply because it sounded correct, reinforcing the risks discussed in our analysis of AI governance gaps and trust failures.

2. Automation Bias Became a Real-World Risk

Automation bias—the tendency to trust machines over human judgment—intensified in 2025. Organizations increasingly delegated decisions to AI systems without robust human oversight.

This overreliance echoed earlier warnings raised in our deep dive on making AI more trustworthy for life-or-death decisions.

The problem wasn’t AI capability. It was misplaced authority.
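One way to picture keeping authority with people is a routing rule that sends high-risk or low-confidence outputs to a human reviewer instead of acting on them automatically. The sketch below is purely illustrative. The `Decision` type, the `HIGH_RISK_DOMAINS` set, the 0.9 threshold, and the `route` function are hypothetical names and values chosen for this example, not taken from any specific product or standard. It also assumes the system exposes a reasonably calibrated confidence score, which fluent language models often do not.

```python
from dataclasses import dataclass

# Hypothetical set of domains where a human must always sign off.
HIGH_RISK_DOMAINS = {"healthcare", "law", "finance"}

# Hypothetical cutoff; a real deployment would need to calibrate this.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class Decision:
    domain: str        # e.g. "healthcare" or "marketing"
    answer: str        # the model's proposed output
    confidence: float  # assumed calibrated score in [0, 1]


def route(decision: Decision) -> str:
    """Return "human_review" or "auto_approve" for a proposed decision."""
    # High-risk domains go to a person no matter how confident the model is.
    if decision.domain in HIGH_RISK_DOMAINS:
        return "human_review"
    # Elsewhere, low confidence still triggers a review.
    if decision.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto_approve"
```

The design choice worth noticing is the order of the checks: in a high-risk domain, a confident-sounding answer never bypasses the reviewer, which is exactly the failure mode automation bias invites.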

[Figure: AI Capability vs Human Trust Gap (2023–2025)]

3. AI Governance Failed to Keep Up

Regulatory delay was another core reason that what AI got wrong in 2025 mattered so much. While AI systems scaled globally, governance frameworks remained fragmented.

Frameworks such as NIST’s AI Risk Management Framework treat accountability as a core governance concern, yet organizations struggled to define it when AI systems failed.

This governance gap didn’t just create legal risk—it eroded public trust.

4. Hallucinations Were Treated as “Acceptable Errors”

Instead of treating hallucinations as critical flaws, many platforms normalized them as “known limitations.” That mindset proved dangerous.

AI hallucinations affected:

- News summarization
- Academic citations
- Business intelligence tools

These failures aligned closely with patterns discussed in our post on when AI goes off-script.

5. Breakthroughs Distracted Us From Fragility

Ironically, the most celebrated breakthroughs of 2025 made AI systems more fragile. Larger models meant:

- Higher energy costs
- Harder interpretability
- More opaque decision paths

External analysis from MIT Technology Review emphasized that scaling intelligence without explainability increases systemic risk.

Many of these failures stem from the rise of agentic AI systems, where models are no longer just responding to prompts but actively planning and executing actions on their own — a shift that fundamentally changes how control, accountability, and risk must be handled in modern AI architectures.
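To make the control and accountability point concrete, here is a minimal sketch of an approval gate that an agent would have to call instead of acting directly. Everything in it is an assumption made for illustration: `ActionGate`, the `unattended` allowlist, the action names, and the reviewer name are hypothetical, and real agent frameworks handle this in their own ways.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set, Tuple


@dataclass
class ActionGate:
    """Hypothetical wrapper an agent must call instead of acting directly."""

    # Actions considered safe to run without a human sign-off (assumed list).
    unattended: Set[str]
    # Each entry records (action name, who authorized it, or "blocked").
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def execute(
        self,
        name: str,
        action: Callable[[], str],
        approved_by: Optional[str] = None,
    ) -> Optional[str]:
        """Run `action` only if it is allowlisted or a named human approved it."""
        if name in self.unattended:
            self.audit_log.append((name, "policy"))
            return action()
        if approved_by is not None:
            self.audit_log.append((name, approved_by))
            return action()
        # Neither allowlisted nor approved: record the attempt and do nothing.
        self.audit_log.append((name, "blocked"))
        return None


gate = ActionGate(unattended={"read_calendar"})
gate.execute("read_calendar", lambda: "ok")            # runs under policy
gate.execute("send_payment", lambda: "paid")           # blocked, returns None
gate.execute("send_payment", lambda: "paid", "alice")  # runs, attributed to alice
```

The audit log is the accountability half of the idea: every attempted action, including the blocked ones, leaves a record of who or what authorized it.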

6. The Real Lesson: Mistakes Shape the Future

The most important takeaway from what AI got wrong in 2025 is simple: mistakes are not failures—they are signals.

They tell us:

- Where human oversight must remain central
- Why explainability matters more than speed
- Why governance is not optional

This lesson directly connects with broader discussions on responsible AI and future readiness, which will define how AI evolves in 2026 and beyond.

Conclusion: Why 2026 Depends on 2025’s Errors

People will not trust AI because it becomes perfect. They will trust it because it becomes honest—about uncertainty, limitations, and risk.

What AI got wrong in 2025 may ultimately become its most valuable contribution—if we choose to learn from it.

- December 30, 2025

- asquaresolution

- 8:44 pm