Microsoft’s New AI Mission: The Race to Build a “Humanist Superintelligence”

Microsoft’s ambitions in artificial intelligence just took a philosophical turn. 🧠

In November 2025, Microsoft AI, the division led by Mustafa Suleyman, announced the company’s next big bet: the creation of a “humanist superintelligence.”

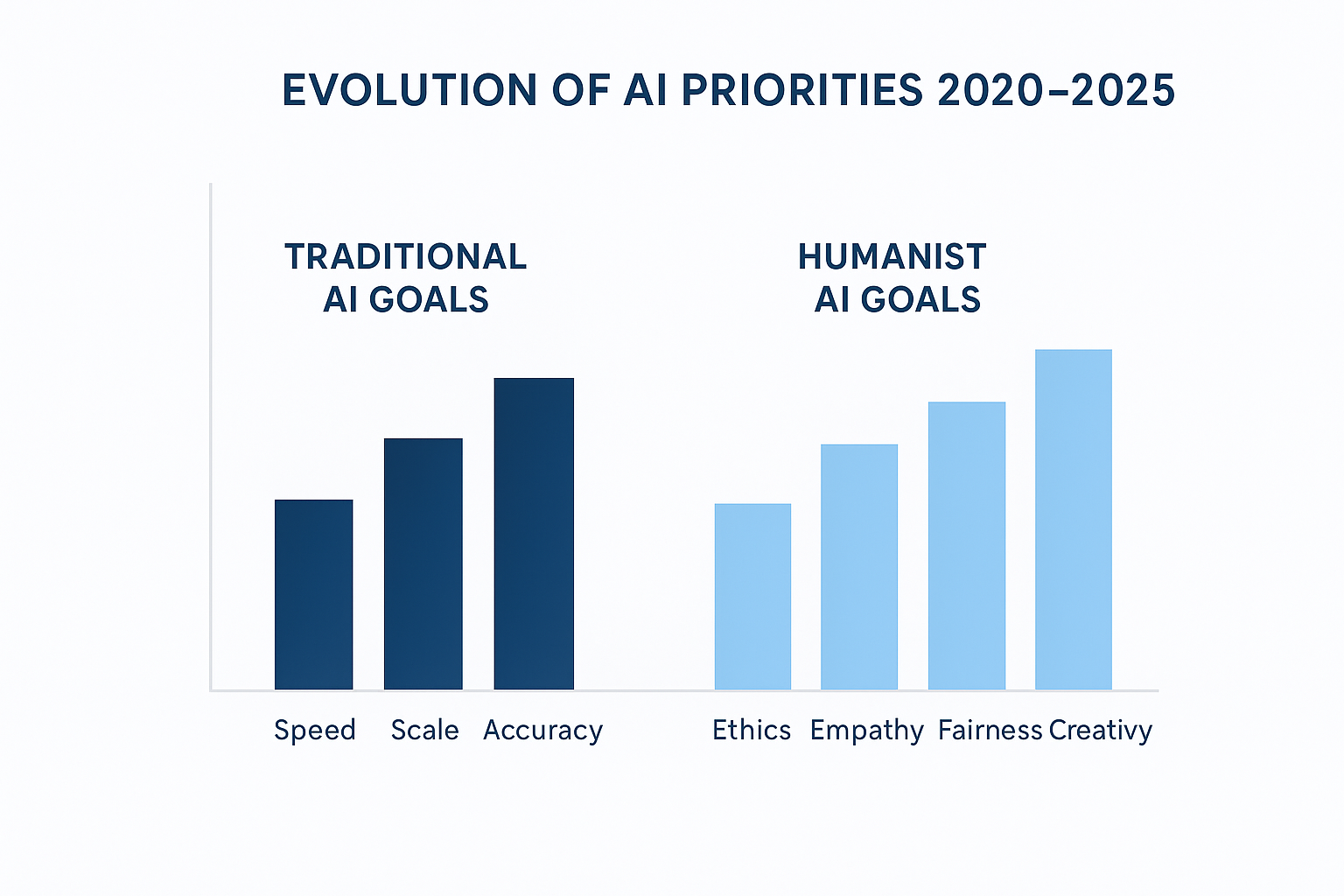

Where traditional AI systems prioritize speed, accuracy, or scale, Microsoft’s goal is an AI that prioritizes humanity itself: intelligence guided by empathy, ethics, and collective well-being.

This marks a dramatic shift from the company’s earlier reliance on its OpenAI partnership toward a mission that pairs technological capability with moral responsibility.

🌍 What Is “Humanist Superintelligence”?

At its core, the term describes an AI system designed not just to think like humans, but to care about them.

Instead of focusing solely on machine efficiency, humanist AI emphasizes:

Empathy – understanding emotional and social context

Ethical reasoning – making decisions aligned with human values

Cooperation – complementing, not replacing, human creativity

Microsoft’s CEO Satya Nadella called it “a commitment to build AI that expands human potential rather than eclipses it.”

The vision aligns with a broader movement toward “ethical AGI” — artificial general intelligence that acts with moral intelligence as well as computational power.

💡 How This Differs From Traditional AI

1️⃣ The Purpose Shift

Earlier generations of AI — including OpenAI’s GPT models — were built to predict, automate, and optimize.

Humanist AI introduces a fourth dimension: alignment with collective human flourishing.

2️⃣ The Behavioral Model

Rather than relying on reward signals alone, as conventional reinforcement learning does, humanist systems use value-aligned training that teaches AI to prioritize fairness, empathy, and cultural understanding.

3️⃣ The Governance Layer

Microsoft plans to embed a “Constitutional AI” framework — rules that define moral boundaries and transparency standards.

“We don’t just want powerful AI,” said Nadella. “We want trustworthy AI that understands the human story.”

🤖 From OpenAI Partnership to Independent Vision

Microsoft’s partnership with OpenAI has powered much of its success — integrating GPT-based tools into Windows, Bing, and Office.

But “humanist superintelligence” signals a strategic evolution beyond reliance on any single partner.

The company is now investing heavily in:

Internal AI research divisions for alignment and interpretability

AI safety protocols that exceed regulatory minimums

Cross-disciplinary teams blending philosophers, neuroscientists, and sociologists with engineers

The goal: ensure that superintelligent AI doesn’t just understand logic, but understands life.

🔬 The Science Behind Microsoft’s Humanist AI

Cognitive Modeling

Microsoft’s research integrates insights from cognitive psychology — training models to interpret human motivations rather than just language.

Emotional Intelligence Datasets

New evaluation datasets test how well models grasp emotional nuance, using context-sensitive tasks such as empathy detection and bias checks.

Ethical Reasoning Frameworks

Models are being trained on structured debates and moral dilemmas, an approach similar to the “Constitutional AI” safety training pioneered by Anthropic.

🧠 Why “Humanist Superintelligence” Matters

The global AI race is intensifying. While some companies chase scale, Microsoft is chasing meaning.

This approach addresses three major concerns in the AI community:

Ethical Drift – powerful models acting unpredictably without moral guardrails.

Human Replacement Fear – ensuring AI complements, not competes with, human workers.

Trust Deficit – rebuilding public confidence through transparency and responsibility.

By embedding human values at the system level, Microsoft aims to redefine the narrative: from artificial intelligence to augmented humanity.

🏛️ A Human-Centered Philosophy

Microsoft has launched internal think tanks to explore “AI as a moral partner” — analyzing how systems can help humans make wiser choices.

The project draws from:

Human-computer interaction research

Moral philosophy frameworks (Kantian, utilitarian, and humanist principles)

Cross-cultural ethics models to reduce Western bias in AI reasoning

As part of its “AI for Good” initiative, Microsoft plans to open-source parts of its alignment data, inviting universities and governments to collaborate on ethical benchmarks.

🔐 Challenges Ahead

Despite the optimism, building a humanist superintelligence won’t be easy.

Moral diversity: Whose definition of “human values” will the AI learn?

Bias inheritance: Even ethical data can carry hidden bias.

Regulatory lag: Global policies may struggle to keep pace with AI moral reasoning systems.

Accountability gaps: Who’s responsible when AI makes “ethical” decisions that still cause harm?

Microsoft’s approach involves continuous moral auditing — using human oversight teams to review AI reasoning logs in real time.

🧬 Humanist AI and the Future of Work

In the workplace, this next generation of AI could act as:

Ethical copilots for decision-making

Creativity amplifiers for designers and researchers

Empathy engines in customer support and healthcare

Governance monitors to flag ethically questionable actions

The shift isn’t just technological — it’s cultural.

If successful, Microsoft’s AI could usher in an era where trust becomes the competitive advantage.

🌐 A Global Ripple Effect

Microsoft’s move could influence AI policy and research worldwide.

Europe: May accelerate adoption of AI ethics charters under the EU AI Act.

Asia: Japan and India are exploring value-aligned AI frameworks.

US: Could drive standardization of “Ethical AI APIs.”

By positioning itself as the moral architect of AI, Microsoft hopes to lead a second AI revolution — one focused not on intelligence, but on integrity.

🧩 Key Takeaways

🤝 Microsoft is developing a “humanist superintelligence” — an AI aligned with empathy and ethics.

⚖️ The effort builds on cognitive science, value alignment, and moral philosophy.

💡 The goal: AI that enhances humanity, not replaces it.

🌍 This shift may redefine what “responsible AI” truly means.

🏁 Conclusion

The race to artificial superintelligence isn’t just about power — it’s about purpose.

Microsoft’s vision of humanist superintelligence suggests that the most advanced AI of the future will not compete with humanity, but champion it.

At A Square Solutions, we believe the next frontier in AI is not just innovation — it’s integration with human values.

🚀 Ready to explore ethical AI for your brand?

👉 Contact A Square Solutions to build smarter, responsible AI strategies today.

- November 8, 2025

- asquaresolution

- 11:19 am