A landmark court case is testing a question that has long been debated but rarely litigated: Can social media platforms be held legally responsible for mental health harm caused by addictive design?

The trial focuses on Instagram and YouTube. It examines whether the companies behind them knowingly built algorithm-driven engagement features that amplify anxiety, depression, and compulsive behavior, especially among young users.

⚖️ What the Trial Is Really About

This is not a free-speech case. It centers on:

- Product design choices
- Algorithmic amplification
- Evidence of psychological impact

Plaintiffs argue that platforms prioritized engagement metrics over user well-being, despite internal research warning of harm.

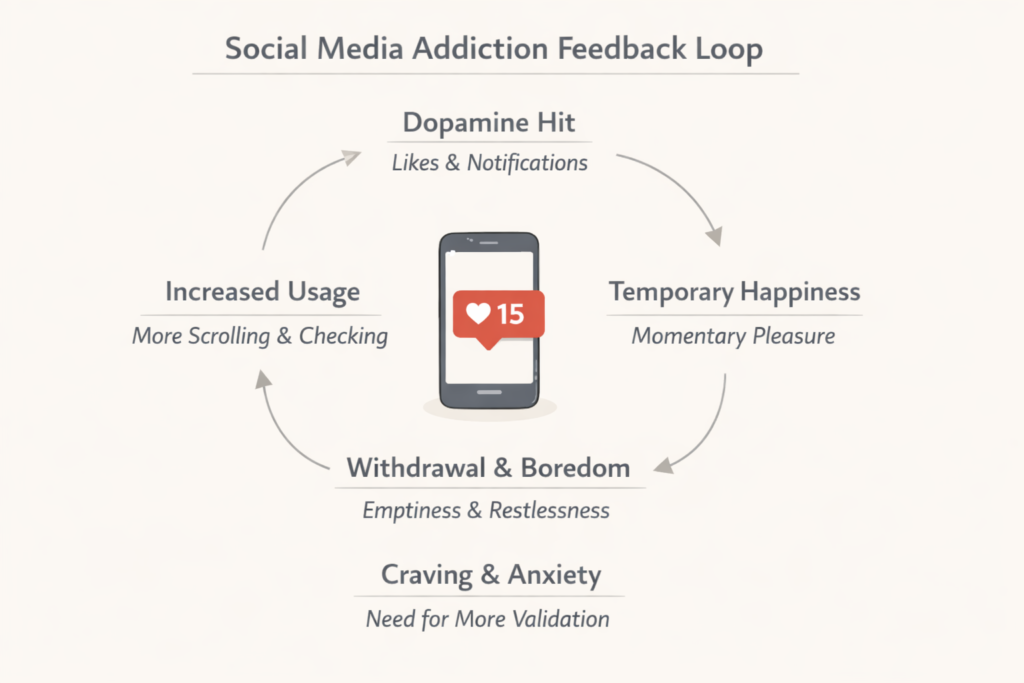

🧠 The Addiction Mechanism Explained

Features under scrutiny include:

- Infinite scroll
- Recommendation loops
- Dopamine-triggering notifications

These mechanisms mirror patterns discussed in our deep dive on how AI systems influence human behavior, where automation subtly shapes decision-making.

🧑‍⚖️ Can Big Tech Actually Be Liable?

The central legal hurdle is proving foreseeability and negligence. If courts accept that the platforms understood the risks and failed to act, a ruling against them could open the door to:

- Regulatory fines
- Design restrictions
- Precedent for similar cases worldwide

This parallels broader accountability debates in emerging AI regulation, including the technology oversight frameworks now taking shape worldwide.

🌍 Why This Case Matters Globally

A single verdict could:

- Reshape platform design standards
- Influence youth-protection laws
- Force transparency in algorithm research

The case itself signals a shift from “user responsibility” toward corporate accountability.

A Square Solutions · February 10, 2026, 11:39 pm