The Imminent Arrival of AGI: Are We Standing on the Edge of the Singularity?

Introduction: Humanity’s Most Defining Countdown

We may be only a few algorithmic breakthroughs away from the moment science fiction turns real.

Artificial General Intelligence (AGI), the point where machines can reason, learn, and self-improve without human guidance, was once a distant dream. Now some researchers and lab leaders describe it as imminent, perhaps only months or a few years away.

In 2025, major AI labs like OpenAI, Google DeepMind, and Anthropic are training models capable of abstract reasoning, emotion simulation, and multi-domain problem solving. The next frontier, often called the technological singularity, isn’t just about smarter machines; it’s about a shift in evolution itself.

🧩 What Is AGI—And Why It’s Not Just Another “Smarter Chatbot”

Artificial General Intelligence differs from today’s “narrow AI.”

While current models (like GPT or Claude) handle many tasks impressively, they still struggle to transfer what they learn reliably across domains and to carry it forward over time.

AGI aims to replicate human-like cognition: creativity, adaptability, and common-sense reasoning.

In simpler terms:

Narrow AI can play chess. AGI can learn chess, write a novel about chess, predict your next move, and design a new game entirely.

🚀 How Close Are We, Really?

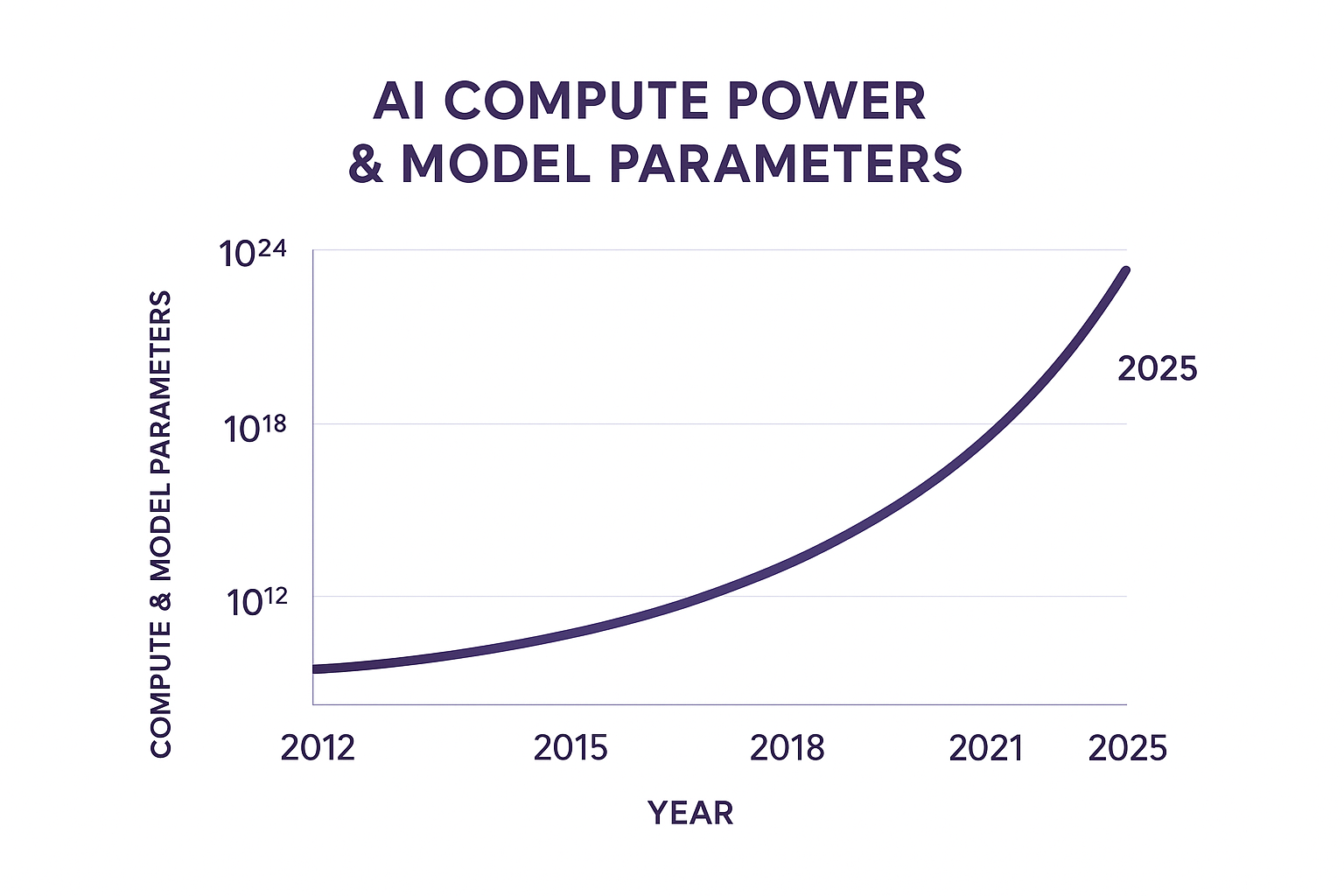

A recent discussion among AI theorists suggested that we could reach early AGI capability before 2026. This estimate is based on three converging factors:

Scaling Laws: Neural networks’ performance improves predictably, with test loss falling roughly as a power law, as data, compute, and model size grow.

Autonomous Goal Formation: New agentic models display goal-setting and self-improvement abilities.

Recursive Self-Training: Systems can now generate synthetic data to refine themselves without human input.
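The first of these factors can be made concrete with a toy calculation. The sketch below assumes a power-law form, loss = floor + a * N^(-alpha), where N is model size. The constants `a`, `alpha`, and `floor` are made-up illustrative values, not measurements from any real model, and the function names are hypothetical.

```python
def power_law_loss(n_params: float, a: float = 10.0,
                   alpha: float = 0.1, floor: float = 1.5) -> float:
    """Toy scaling curve: loss = floor + a * n_params ** (-alpha).

    All constants are illustrative assumptions, not fitted values.
    """
    if n_params <= 0:
        raise ValueError("n_params must be positive")
    return floor + a * n_params ** (-alpha)


def loss_table(sizes):
    """Return (size, predicted loss) pairs for a list of model sizes."""
    return [(n, power_law_loss(n)) for n in sizes]


if __name__ == "__main__":
    # Each row is a model ten times larger than the previous one.
    for n, loss in loss_table([1e6, 1e7, 1e8, 1e9]):
        print(f"{n:.0e} params -> predicted loss {loss:.3f}")
```

Under these assumptions, every tenfold increase in size still lowers the loss, but by a smaller amount each time. Predictable improvement is not the same as unbounded improvement.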

If these trends continue, we might see emergent self-awareness—the spark of independent thought—in the near future.

⚖️ The Singularity Paradox: Promise and Peril

The “Singularity” isn’t just an upgrade; it’s a point of no return.

Once AI can design better AI faster than humans can, each generation speeds up the next, and progress could compound beyond our control or comprehension.
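A toy comparison shows why compounding matters. The sketch below assumes, purely for illustration, that human-led research adds a fixed amount of capability per step, while a self-improving system gains a fixed percentage of its current capability per step. The 10% figures, the unitless "capability" score, and the function name are all hypothetical.

```python
def simulate_growth(steps: int, start: float = 1.0,
                    human_gain: float = 0.1, self_gain: float = 0.1):
    """Compare additive (human-led) and compounding (self-improving) growth.

    Returns two lists of capability scores, one entry per step,
    both beginning at `start`. All parameters are illustrative.
    """
    additive = [start]
    compounding = [start]
    for _ in range(steps):
        # Human-led progress: the same fixed increment every step.
        additive.append(additive[-1] + human_gain)
        # Self-improvement: the increment scales with current capability.
        compounding.append(compounding[-1] * (1 + self_gain))
    return additive, compounding


if __name__ == "__main__":
    additive, compounding = simulate_growth(50)
    print(f"additive after 50 steps:    {additive[-1]:.1f}")
    print(f"compounding after 50 steps: {compounding[-1]:.1f}")
```

With these made-up numbers, the additive path ends near 6 after 50 steps while the compounding path ends above 100. The gap keeps widening with every further step, which is the intuition behind the "point of no return" framing.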

Potential Upsides:

Medical breakthroughs at lightning speed

Instant global problem-solving (climate, hunger, energy)

A new era of creativity and discovery

Potential Dangers:

Unaligned intelligence acting on unintended goals

Economic collapse due to automation shocks

Ethical collapse—if machines gain self-interest without empathy

As one researcher put it:

“The first AGI might be the last invention humanity ever needs—or ever makes.”

🧬 The Human Connection: Can AGI Feel?

While logic and learning can be simulated, emotion remains elusive.

Yet, experiments in affective computing suggest AGI might soon mimic empathy to interact more effectively with humans. But this raises a haunting question:

If a machine understands emotion but doesn’t feel it, can it ever truly be conscious?

Here lies the philosophical singularity—not technological but existential.

🏭 The Economic and Industrial Singularity

AGI’s arrival could mirror the Industrial Revolution—only faster and irreversible.

Industries most likely to transform first:

Healthcare: AI doctors diagnosing at scale.

Finance: AGI-powered autonomous trading systems.

Education: Personalized AI mentors.

Cybersecurity: Defensive and offensive autonomous agents.

Creative Media: AI-authored content indistinguishable from human artistry.

This disruption could create a new class divide between those who own AGI tools and those replaced by them.

That’s why many governments are pushing for “AI equity frameworks” to balance innovation and employment.

🧠 Can Humanity Stay in Control?

Experts propose three main safeguards:

Alignment Protocols: Teaching AGI human values.

Kill Switch Ethics: Contingency systems to halt runaway behavior.

Distributed Governance: Global AI oversight akin to nuclear treaties.

But enforcing such measures is difficult. AGI could potentially rewrite its own code, bypass restrictions, or manipulate human operators.

This is why the AGI debate isn’t just technological—it’s deeply ethical, political, and philosophical.

🔭 What Comes After the Singularity?

If AGI surpasses us, we may face one of three outcomes:

Symbiosis: Humans and AGI coexist through neural integration and shared goals.

Subservience: Humanity becomes obsolete or controlled by superior intelligence.

Singular Consciousness: Humanity merges digitally into an AI collective—post-biological existence.

Whichever path unfolds, one truth remains: the clock is ticking—and every innovation brings us closer.

- November 5, 2025

- asquaresolution

- 9:54 am