AI Training Without Massive Data May Be Possible After All

For years, the artificial intelligence industry has operated under a single assumption: more data equals better intelligence. Larger datasets, more compute power, and longer training cycles became the default path toward smarter machines. But emerging research now suggests this assumption may be fundamentally flawed.

New findings indicate that AI training without massive data may not only be possible but may also be closer to how intelligence actually works in the human brain.

Rather than oceans of data, the key may lie in the structure of the AI systems themselves.

Why Today’s AI Is So Data-Hungry

Modern AI systems are typically trained on millions or even billions of examples. This approach has produced impressive results, but at an enormous cost:

Massive energy consumption

Data center infrastructure rivaling small cities

Rising financial and environmental costs

Limited accessibility for smaller organizations

This model contrasts sharply with human learning. Humans learn to recognize faces, objects, and patterns using very little data, yet achieve remarkable generalization.

The gap raises a critical question:

Is data volume compensating for poor architectural design?

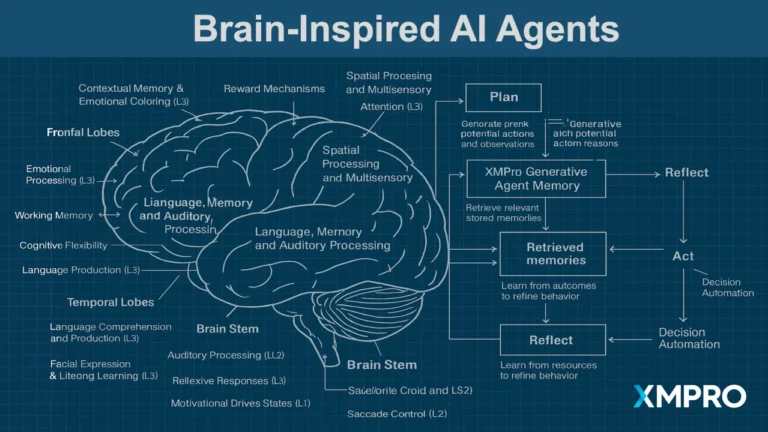

Brain-Inspired AI Changes the Starting Point

Recent research from Johns Hopkins University explored a radical idea:

What if AI systems started with structures that already resemble biological brains?

Instead of training models endlessly, researchers tested whether architecture alone could produce brain-like behavior.

The results were surprising.

Untrained AI systems built with biologically inspired structures showed internal activity patterns closely resembling those recorded in human and primate brains, even before seeing any training data.

This suggests that how AI is built may matter as much as how it is trained.

Architecture vs Data: A Shift in AI Thinking

The study compared three major AI architectures:

Transformers

Fully connected neural networks

Convolutional neural networks (CNNs)

While scaling up transformers and fully connected networks produced only limited improvements in brain similarity, convolutional neural networks stood out.

When CNNs were designed with brain-aligned structures, their internal activity patterns closely matched responses in the biological visual cortex, even without training.

This challenges a long-held belief in AI development: that intelligence emerges primarily from data exposure rather than from design.
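The article does not say how the study measured whether a network's activity "matched" the visual cortex. One widely used technique for this kind of comparison is representational similarity analysis (RSA). For each system, you compute how dissimilar its responses are for every pair of stimuli, and then you correlate the two dissimilarity patterns. The pure-Python sketch below illustrates that idea under this assumption. The function names and the toy response data are hypothetical and do not represent the study's actual method or data.

```python
# Minimal representational similarity analysis (RSA) sketch in pure Python.
# Assumption: RSA is used here only as an illustrative way to compare a
# model's activity with brain activity; it is not taken from the study.
from itertools import combinations
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def rdm(responses: list[list[float]]) -> list[float]:
    """Upper triangle of a representational dissimilarity matrix.

    responses[s] is the activity vector (model units or neurons) for
    stimulus s; dissimilarity is 1 - Pearson correlation of two vectors.
    """
    return [1 - pearson(responses[a], responses[b])
            for a, b in combinations(range(len(responses)), 2)]


def rsa_score(model: list[list[float]], brain: list[list[float]]) -> float:
    """Spearman correlation (Pearson on ranks) between the two RDMs."""
    return pearson(ranks(rdm(model)), ranks(rdm(brain)))


# Toy data: 4 stimuli, 3 recorded units each (hypothetical values).
brain = [[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [3.0, 2.0, 1.0], [2.0, 1.0, 4.0]]
model = [brain[0], brain[2], brain[1], brain[3]]  # stimuli 1 and 2 swapped

print(rsa_score(brain, brain))  # identical geometry
print(rsa_score(model, brain))  # scrambled geometry scores lower
```

A score near 1 means the model groups and separates stimuli the way the recorded neurons do. In real analyses, the responses would come from network layers and from neural recordings for many images, not from four toy vectors.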

Why Convolutional Networks Matter

Convolutional architectures naturally resemble how the human brain processes visual information:

Local receptive fields

Hierarchical pattern recognition

Spatial awareness built into structure

Because these properties are baked into the architecture, the system does not need to “learn” them from scratch through data.
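Two of the properties listed above, local receptive fields and built-in spatial structure, are visible even in a convolution whose weights have never been trained. The sketch below rests on illustrative assumptions: a random 3x3 kernel, a toy 8x8 image, and hypothetical helper names. It shows that shifting the input shifts the output feature map by the same amount. This property, known as translation equivariance, comes from the architecture rather than from data.

```python
# Sketch: an untrained convolution already has spatial structure built in.
# The kernel is random (never trained), yet shifting the input shifts the
# output by the same amount, because every output unit applies the same
# small kernel to its own local patch of the image.
import random


def conv2d_valid(image: list[list[float]],
                 kernel: list[list[float]]) -> list[list[float]]:
    """2D cross-correlation (as used in CNNs), no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def shift_right(image: list[list[float]], k: int) -> list[list[float]]:
    """Shift every row right by k columns, filling with zeros."""
    return [[0.0] * k + row[:len(row) - k] for row in image]


random.seed(0)
# Untrained weights: a random 3x3 kernel.
kernel = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]

# An 8x8 image containing a small bright patch.
image = [[0.0] * 8 for _ in range(8)]
for r in range(2, 4):
    for c in range(1, 3):
        image[r][c] = 1.0

out = conv2d_valid(image, kernel)
out_shifted = conv2d_valid(shift_right(image, 2), kernel)

# Wherever the shift is fully defined, out_shifted[r][c] == out[r][c - 2].
matches = all(
    abs(out_shifted[r][c] - out[r][c - 2]) < 1e-12
    for r in range(len(out)) for c in range(2, len(out[0]))
)
print("translation equivariant:", matches)
```

The check holds for any kernel values because the same weights are reused at every position. A fully connected layer with random weights would generally not have this property; it would have to learn it from data.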

In practical terms, this could mean:

Faster learning

Lower energy consumption

Reduced dependence on massive datasets

This insight could reshape how future AI systems are developed.
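The efficiency argument above can be put in rough numbers. The sketch below counts the free parameters a network must learn when it maps a 32x32 grayscale image to 16 feature maps of the same size. It compares a 3x3 convolution with a fully connected layer. The image and layer sizes are assumptions chosen for illustration, not figures from the study.

```python
# Back-of-the-envelope parameter count (illustrative sizes, not from the study).
# Task: map a 32x32 single-channel image to 16 feature maps of size 32x32.
# The convolution is assumed to use padding to keep the 32x32 size;
# padding does not change its parameter count.

H, W, C_IN, C_OUT, K = 32, 32, 1, 16, 3


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights plus biases of a k x k convolution (independent of image size)."""
    return c_out * (c_in * k * k) + c_out


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


conv = conv_params(C_IN, C_OUT, K)
dense = dense_params(H * W * C_IN, H * W * C_OUT)

print(f"convolutional layer:   {conv:,} parameters")
print(f"fully connected layer: {dense:,} parameters")
print(f"ratio: about {dense // conv:,}x")
```

With these sizes, the convolution needs 160 parameters (16 kernels of 9 weights, plus 16 biases). The fully connected layer needs 16,793,600, roughly 105,000 times more. Having fewer free parameters is one reason a well-structured architecture can learn from less data. Parameter count alone, however, does not determine how much data a model needs.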


A Faster, Cheaper Path to Smarter AI

If architectural design can place AI systems closer to human-like intelligence from the start, the implications are profound:

AI development becomes more sustainable

Smaller teams can compete without massive compute budgets

Learning becomes faster and more adaptable

Energy and carbon costs decline

This approach also aligns with a growing emphasis in AI research on efficiency and adaptability over brute force.

You can already see similar themes emerging in broader AI discussions, such as AI investment efficiency and the economic limits of scaling, explored in our analysis of AI investment ROI in 2025.

What This Means for the Future of AI

This research does not suggest that training data is irrelevant, but it does suggest that data should refine intelligence rather than create it from scratch.

Future AI systems may:

Start with biologically inspired blueprints

Learn with minimal data

Adapt faster across tasks

Become more human-like in reasoning

This could also accelerate progress toward general-purpose intelligence without today's unsustainable costs, a topic closely tied to ongoing debates around AGI development and AI architecture design.

Research Reference

For readers who want to explore the original scientific work, the findings were published in Nature Machine Intelligence and summarized by ScienceDaily: brain-inspired AI architecture research

❓ FAQs

Can AI really learn without massive training data?

AI will likely still require data, but brain-inspired architectures show that systems can start much closer to brain-like behavior, which could substantially reduce data needs.

Why are convolutional neural networks more brain-like?

CNNs loosely mimic how biological vision systems process information, using hierarchical, spatially local pattern recognition similar to that of the human visual cortex.

Will this reduce AI development costs?

Potentially. Smarter architectural design could significantly lower compute, energy, and infrastructure costs.

Does this mean transformers are obsolete?

No. Transformers remain powerful, but this research suggests architecture choice should match the task, not default to scale.

- January 7, 2026

- asquaresolution

- 1:07 pm