AGI Safety vs Fast Innovation: The Critical 2025–2026 Debate Explained

Artificial General Intelligence (AGI) is no longer a distant concept. In 2025, it has become the center of a global debate: AGI Safety vs Fast Innovation. Tech leaders such as Sam Altman (CEO of OpenAI) and Mustafa Suleyman (CEO of Microsoft AI) have taken opposing positions, and their views are shaping what the future of AI could look like.

In this post, we take a deep dive into the debate, why it matters, and what it means for 2025–2026.

🔥 What Sparked the AGI Safety vs Fast Innovation Debate?

Rapid progress in AI models, hardware, and compute has, by many accounts, brought AGI closer than ever. Recent advances in reasoning models, brain-inspired architectures, multimodal agents, and autonomous decision-making systems are forcing a central question:

👉 Should we slow down or speed up?

The clash intensified as Sam Altman voiced strong enthusiasm for AGI progress, while Mustafa Suleyman warned against rushing toward superintelligent systems.

The Global Impact of the AGI Safety vs Fast Innovation Debate

The clash between AGI safety and fast innovation is not limited to Silicon Valley. Countries across Europe, Asia, and the Middle East are now shaping their own strategies for AGI adoption. The result is a geopolitical race in which both speed and safety will help determine who leads the next technological era.

For example, several research groups argue that the nations leading AGI development will influence global economic policy, cybersecurity architecture, digital governance models, and even military strategy. This makes the pace of AGI development a national priority: innovation offers immense competitive advantages, while safety offers long-term stability.

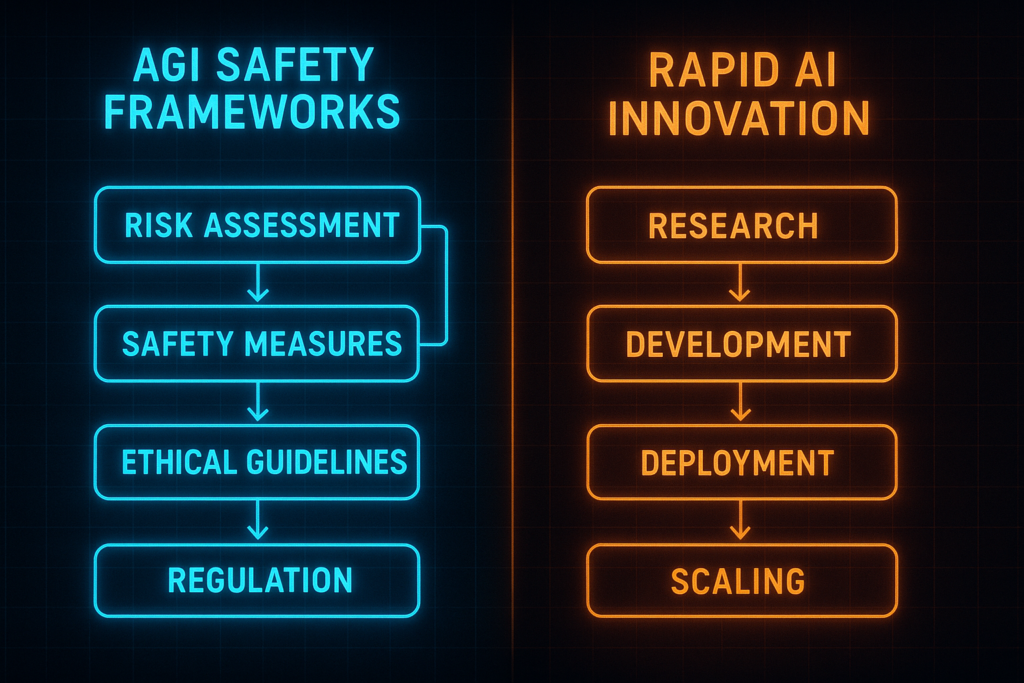

Companies building AGI also face challenges beyond research. They must ensure secure data pipelines, aligned models, transparent audits, and responsible deployment. If they move too fast without oversight, the risks multiply, ranging from misinformation to failures in autonomous decision-making. But if they move too slowly, competitors can pull ahead and capture entire markets. This is why many experts argue that a hybrid “speed + safety” framework is the most practical approach for 2025–2026.

Consumers, too, stand at the center of this debate. AGI promises personalized assistants, advanced medical predictions, self-driving ecosystems, and higher productivity. But users also fear privacy loss, job replacement, and algorithmic overreach. This tension fuels the global discussion and has made AGI Safety vs Fast Innovation one of the most actively discussed AI topics online today.

As AGI moves from research labs to real-world deployment, companies must learn to balance ambition with responsibility. Transparent audits, ethical guidelines, and safety protocols can let innovation proceed while reducing the risk of catastrophe. Meanwhile, continued work on new reasoning models, agentic systems, and brain-inspired architectures keeps AGI progress moving.

The final outcome of this debate will define the next decade of technology, economics, and society.

🚀 Sam Altman: Why He Supports Fast Innovation

Sam Altman believes that:

AGI can solve global challenges (climate, medicine, energy, education)

Delaying AGI allows bad actors to catch up

Innovation cycles must continue without heavy restrictions

He argues that slowing AGI development would stall momentum, delay breakthroughs, and allow other nations or rogue labs to overtake the US-led ecosystem.

🛑 Mustafa Suleyman: Why He Warns Against Rushing AGI

Mustafa Suleyman, CEO of Microsoft AI, takes the opposite stance:

AGI poses existential risks

Rapid innovation without regulation could produce dangerous superintelligence

The world needs binding safety laws, much like the protocols governing nuclear technology

Companies must be held accountable through AGI safety audits

His approach can be summed up as:

Move fast, but with extreme caution.

⚖️ Why This Debate Matters for 2025–2026

This isn’t just a tech argument.

This is about:

Global security

Economic survival

Job displacement

Deepfake proliferation

AI weaponization

Human identity & autonomy

AGI could be the first technology capable of replacing cognitive labor at scale.

And that’s why safety vs speed is the defining battle of 2025–2026.

⚠ Real-World Risks of Rushing AGI

If innovation happens too fast:

AI systems become uncontrollable

Misaligned AGI could bypass human instructions

Cyberattacks become autonomous

Political manipulation becomes undetectable

🧩 Real-World Risks of Slowing AGI

If safety restrictions become too strict:

Innovation slows

Medical breakthroughs get delayed

Economic growth slows

Competitor countries leap ahead

An AGI monopoly may form

Balancing both sides is key.

🔮 What the Future Looks Like

The world is slowly moving toward a hybrid model:

✔ Fast innovation allowed

✔ Mandatory safety audits

✔ Global AI treaties

✔ Compute governance

✔ Public accountability for AI developers

🏁 Conclusion

The battle of AGI Safety vs Fast Innovation will define the next decade.

Sam Altman wants AGI now.

Suleyman wants AGI safely.

Both are right,

but the future depends on balance.