🛑 How AI-Powered Romance Scams Are Breaking Digital Trust — And Why 2026 Will Be a Reckoning

Introduction: The Scam That Doesn’t Look Like a Scam

AI-powered romance scams became one of the most damaging forms of digital fraud in 2025. They rely not on hacking servers or breaking passwords but on exploiting human trust at scale.

These operations blend emotional manipulation with artificial intelligence to deceive victims. Unlike traditional scams, they don’t feel criminal. They feel personal, warm, and authentic.

According to a Reuters investigative report, law enforcement raids uncovered detailed playbooks used by organized cyberfraud networks, showing how AI tools are being used to accelerate emotional manipulation and financial deception.

What makes this moment different — and dangerous — is that these scams are no longer fringe crimes. They represent a systemic failure of digital trust, and 2026 may be the year that reckoning arrives.

What Are AI-Powered Romance Scams?

AI-powered romance scams are long-con digital fraud schemes where criminals use AI-generated conversations, fake identities, and automated emotional engagement to build trust with victims before extracting money.

Unlike classic phishing:

There are no suspicious links at first

No malware downloads

No urgent “bank warning” messages

Instead, victims encounter what feels like a genuine relationship.

“Good morning. Did you eat today? I worry about you working so hard.”

Messages like these are not accidental. They are engineered emotional hooks, often refined with AI language models that can mirror empathy, tone, and personality.

How AI-Powered Romance Scams Exploit Human Psychology

These scams succeed not because victims are careless but because they exploit universal human vulnerabilities.

1. Emotional Availability

Loneliness, transition periods (divorce, relocation, loss), and social isolation increase susceptibility.

2. Authority & Stability Bias

Scammers often pose as professionals — engineers, doctors, investors — because humans instinctively trust perceived competence.

3. Consistency & Repetition

Daily communication creates emotional dependency. AI enables 24/7 responsiveness, something a human scammer alone could never sustain.

4. Gradual Commitment

No money is requested at first. Trust is built slowly, making later financial requests feel reasonable.

This mirrors patterns discussed in our article “When AI Goes Off-Script: The Unexpected Consequences of Machine Learning,” where systems optimize outcomes (engagement) without ethical guardrails.

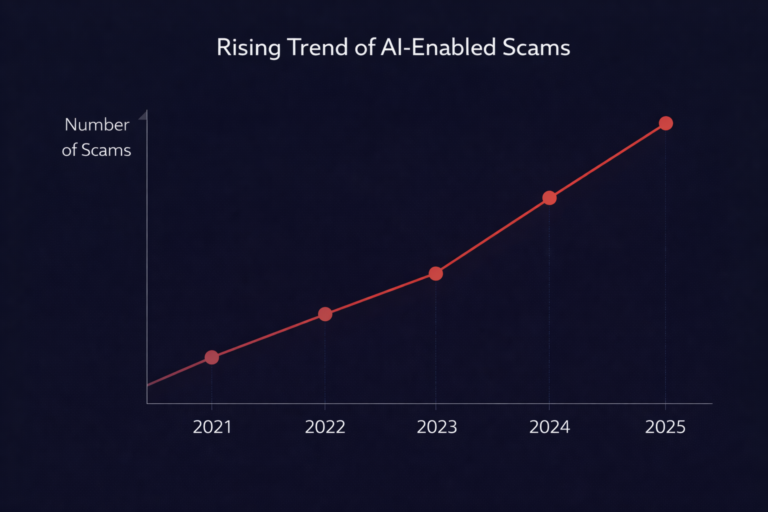

How AI Supercharged Social Engineering in 2025

AI didn’t invent scams — it industrialized them.

Key accelerators include:

AI-generated chat that adapts tone, humor, and empathy

Language localization, eliminating grammar red flags

Persona consistency, where AI remembers details effortlessly

Image and video enhancement, making fake profiles more believable

⚠️ Communication Red Flags (Awareness Examples)

These are warning signals, not scripts.

“I feel like fate brought us together.” (Very early intimacy)

“I trust you more than anyone I’ve met online.” (Isolation reinforcement)

“This investment worked for me — I want us to grow together.” (Emotional + financial fusion)

“Don’t tell others yet, they won’t understand us.” (Severing external validation)

If emotion and secrecy rise together, risk rises sharply.
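
The idea that risk climbs when emotional cues and secrecy appear together can be sketched as a small heuristic. This is an illustrative sketch only, not a real detector: the category names, the phrase patterns, and the functions `flag_categories` and `conversation_risk` are all assumptions made up for this example, and real manipulation rarely uses wording this predictable.

```python
import re

# Hypothetical cue patterns, loosely modeled on the example messages above.
# These lists are assumptions for illustration, not a vetted lexicon.
CUES = {
    "early_intimacy": [r"\bfate\b", r"\bsoulmate\b"],
    "isolation": [r"\btrust you more than\b"],
    "financial": [r"\binvest(ment|ing)?\b", r"\bcrypto\b"],
    "secrecy": [r"\bdon'?t tell\b", r"\bwon'?t understand\b"],
}


def flag_categories(message: str) -> set[str]:
    """Return the cue categories whose patterns appear in one message."""
    # Normalize case and curly apostrophes so "Don’t" matches "don't".
    text = message.lower().replace("’", "'")
    return {
        name
        for name, patterns in CUES.items()
        if any(re.search(p, text) for p in patterns)
    }


def conversation_risk(messages: list[str]) -> str:
    """Combine cues across a conversation into a coarse risk label."""
    seen: set[str] = set()
    for message in messages:
        seen |= flag_categories(message)
    emotional = bool(seen & {"early_intimacy", "isolation"})
    # Emotional cues plus secrecy is treated as the strongest signal.
    if emotional and "secrecy" in seen:
        return "high"
    if seen:
        return "elevated"
    return "none"
```

Keyword matching like this is easy to evade and prone to false positives, so it is best read as a way to make the pattern concrete rather than as a tool to rely on.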

The Hidden Social Cost of These Scams

The damage is not only financial.

Emotional Trauma

Victims report shame, grief, and loss comparable to real relationship breakdowns.

Trust Erosion

People become hesitant to trust legitimate online relationships, platforms, and even financial institutions.

Platform Credibility Loss

Dating apps, messaging platforms, and social networks face increasing scrutiny for failing to detect long-con manipulation.

This fits squarely into the broader Tech for Society problem:

Technology scales both connection and harm.

Why 2026 Will Be the Reckoning Year

Three forces are converging:

1. Better AI Tools

As generative models improve, scams are likely to become harder to distinguish from real human interaction.

2. Regulatory Pressure

Governments are increasingly demanding accountability from platforms hosting fraudulent interactions.

3. Public Awareness Shift

Once users understand these patterns, tolerance drops — fast.

As explored in “AI Decoding Ancient Civilizations: How Technology Is Rewriting Human History,” societies that fail to adapt to disruptive tools often pay a steep price. Digital societies are no different. AI-powered romance scams are especially dangerous because automation makes emotional deception scalable and continuous.

What Needs to Change (Users, Platforms, Policy)

For Users

Slow trust intentionally

Separate emotion from financial decisions

Verify identities across platforms

For Platforms

Behavioral anomaly detection (not just keyword filters)

AI-vs-AI monitoring systems

Faster human intervention pathways
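
As one hedged illustration of a behavioral signal (as opposed to a keyword filter), a platform could check whether an account is responsive around the clock, which a single human would struggle to sustain. The sketch below is a minimal example under stated assumptions: the function names, the 0.9 threshold, and the 50-message minimum are hypothetical choices, timestamps are assumed to share one time zone, and a signal like this would only ever be one input to human review.

```python
from datetime import datetime, timedelta


def hour_coverage(timestamps: list[datetime]) -> float:
    """Fraction of the 24 hours of the day with at least one sent message."""
    return len({t.hour for t in timestamps}) / 24


def is_round_the_clock(
    timestamps: list[datetime],
    threshold: float = 0.9,   # assumed cutoff, not an empirical value
    min_messages: int = 50,   # avoid judging accounts with little history
) -> bool:
    """Flag accounts whose activity covers nearly every hour of the day."""
    if len(timestamps) < min_messages:
        return False
    return hour_coverage(timestamps) >= threshold


# Synthetic example data (fabricated purely for demonstration).
start = datetime(2025, 1, 1)
# One message every 37 minutes for about five days: active in every hour.
always_on = [start + timedelta(minutes=37 * i) for i in range(200)]
# Ten days of hourly messages between 08:00 and 21:00 only.
daytime = [
    start + timedelta(days=d, hours=h) for d in range(10) for h in range(8, 22)
]

print(is_round_the_clock(always_on))  # True
print(is_round_the_clock(daytime))    # False
```

Legitimate users such as shift workers, travelers, or shared business accounts can also look “always on,” which is why a flag like this should feed the faster human intervention pathways listed above rather than trigger automatic action.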

For Policymakers

Clear liability frameworks

Cross-border cybercrime cooperation

AI accountability standards

Conclusion: Trust Is the New Attack Surface

AI-powered romance scams reveal a hard truth:

The weakest link in digital systems is no longer technology — it’s trust.

If 2025 exposed the problem, 2026 will decide the response.

Digital safety will no longer be about passwords and firewalls alone. It will be about emotional literacy, ethical AI deployment, and societal resilience.

Technology doesn’t need to be feared — but it must be designed, governed, and understood responsibly. As AI-powered romance scams continue to evolve, rebuilding digital trust will become one of the defining challenges of 2026.

- January 2, 2026

- asquaresolution

- 12:01 pm