AI in FMCG Industry (2026): 9 Real Use Cases Driving Revenue, Automation & ROI


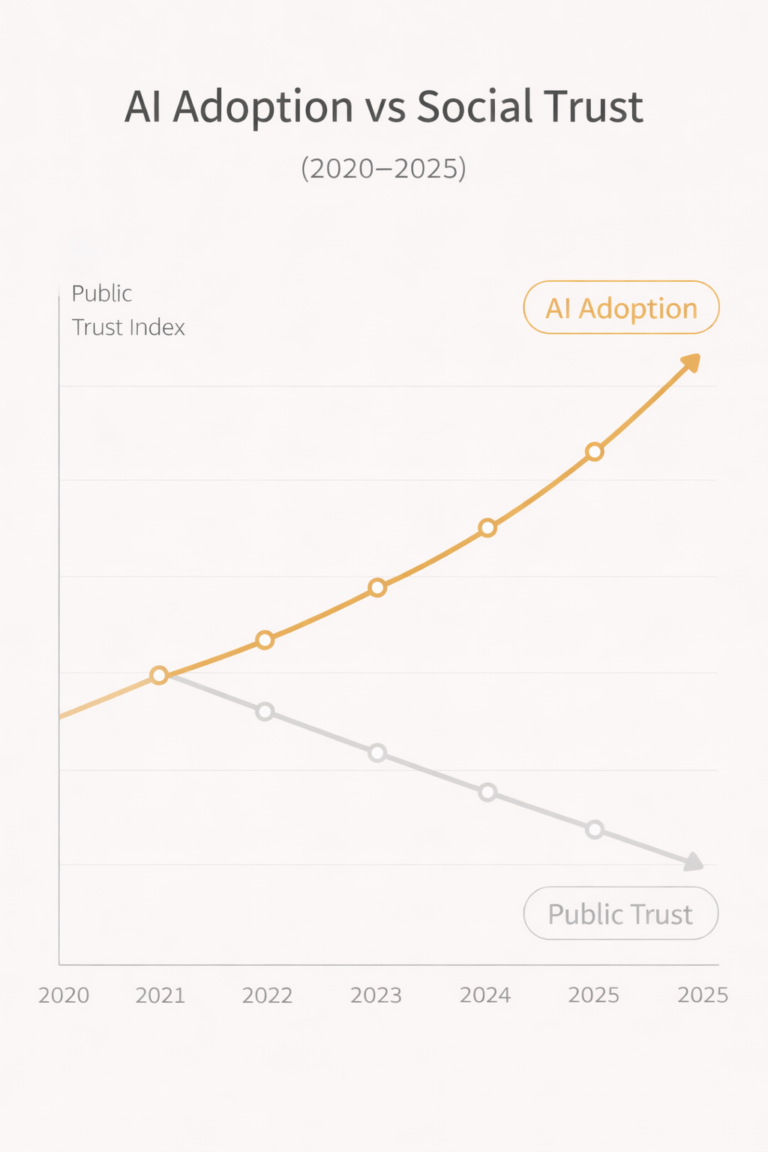

In 2025, artificial intelligence didn’t just accelerate innovation — it quietly reshaped society.

While headlines celebrated faster models, smarter agents, and cheaper automation, a deeper story unfolded beneath the hype.

AI systems entered workplaces, classrooms, hospitals, courts, and governments — often faster than society could adapt. The result wasn’t a dramatic collapse. It was something subtler and more dangerous: a growing social cost that went largely unnoticed.

This article explores the hidden social cost of AI in 2025 — and why 2026 will be a reckoning year, not a breakthrough year.

AI did not cause mass unemployment in 2025.

Instead, it created something more destabilizing: permanent uncertainty.

Roles didn’t disappear overnight — they eroded.

Junior positions vanished first.

Human judgment was downgraded to “override only.”

Workers didn’t lose jobs.

They lost career predictability.

This mirrors patterns already seen in automation-heavy sectors, which we discussed in our analysis of AI governance and risk systems on asquaresolution.com.

Economic anxiety spreads faster than job loss. By the end of 2025, millions were working alongside AI they didn’t trust — or understand.

Across finance, healthcare, hiring, and law enforcement, AI systems increasingly influenced outcomes — but accountability lagged behind.

When an AI:

- denies a loan
- flags a transaction
- deprioritizes a patient
- suppresses someone’s visibility online

Who is responsible?

In 2025, the usual answer was: no one, at least not clearly.

This accountability gap is one reason regulators worldwide are now accelerating work on AI frameworks, a shift already visible in discussions of AI risk management and governance.

One of the least-discussed social costs of AI in 2025 was mental offloading.

People increasingly relied on AI for:

- decision framing
- emotional validation
- summarizing reality

This wasn’t laziness.

It was convenience scaling faster than critical thinking.

Psychologists warned of “confidence without comprehension” — people trusting AI outputs they couldn’t evaluate.

Research institutions such as the Stanford Institute for Human-Centered AI have already flagged long-term cognitive dependency as a societal risk.

AI systems in 2025 didn’t eliminate bias.

They standardized it.

Bias moved from:

- visible prejudice → statistical probability
- human intent → training data inertia

Because decisions came from “models,” they felt neutral — even when outcomes weren’t.

This made social harm:

- harder to contest
- harder to audit
- harder to emotionally process

A key reason 2026 will be different is growing pressure for explainable, auditable AI systems.

Perhaps the biggest hidden cost of AI in 2025 was the lack of consent.

AI was:

- deployed by corporations
- integrated by institutions
- optimized for efficiency

But it was rarely debated in public.

Society didn’t opt in.

It was silently upgraded.

This gap is why 2026 will not be about faster AI but about who decides how it’s used.

2026 will mark a shift from capability obsession to consequence management.

Expect:

- stricter AI accountability laws
- mandatory transparency for high-risk systems
- human-in-the-loop requirements
- public pushback against opaque automation

AI will not slow down.

But society will push back harder.

The solution is not less AI.

It is better alignment.

Key principles emerging for 2026:

- Human override by default
- Explainability as a requirement, not a feature
- Social impact audits alongside technical benchmarks
- Clear ownership of AI outcomes

Technology must scale responsibility at the same speed as intelligence.
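To make "human override by default" and "clear ownership of AI outcomes" more concrete, here is a minimal Python sketch of a decision record that names an accountable owner, stores a plain-language reason, and holds adverse outcomes until a human reviews them. Everything in it is hypothetical: the `Decision` fields, the `decide` and `human_review` helpers, the score threshold, and the rule that only denials need review are illustrative assumptions, not a description of any real system or regulation.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical rule: adverse outcomes are treated as high-risk and need a human.
HIGH_RISK_OUTCOMES = {"deny"}


@dataclass
class Decision:
    """Hypothetical record of one automated decision, kept for audit."""
    subject_id: str
    outcome: str                  # the model's proposed outcome: "approve" or "deny"
    reasons: List[str]            # plain-language explanation that can be contested
    model_version: str
    accountable_owner: str        # a named person or team, never "the model"
    needs_human_review: bool = False
    final_outcome: Optional[str] = None  # stays None until the outcome takes effect
    reviewed_by: Optional[str] = None


def decide(subject_id: str, score: float, threshold: float,
           owner: str, model_version: str = "v1") -> Decision:
    """Turn a model score into a proposed outcome with an owner and a reason."""
    outcome = "approve" if score >= threshold else "deny"
    reasons = [f"score {score:.2f} compared with threshold {threshold:.2f}"]
    decision = Decision(subject_id, outcome, reasons, model_version, owner)
    if outcome in HIGH_RISK_OUTCOMES:
        # Human override by default: adverse outcomes wait for a reviewer.
        decision.needs_human_review = True
    else:
        decision.final_outcome = outcome
    return decision


def human_review(decision: Decision, reviewer: str,
                 override: Optional[str] = None) -> Decision:
    """Record who reviewed the decision and whether they overrode the model."""
    decision.reviewed_by = reviewer
    decision.final_outcome = override if override is not None else decision.outcome
    decision.needs_human_review = False
    return decision


if __name__ == "__main__":
    d = decide("applicant-42", score=0.41, threshold=0.5, owner="credit-risk-team")
    print(d.outcome, d.needs_human_review, d.final_outcome)  # deny True None
    human_review(d, reviewer="loan-officer-7", override="approve")
    print(d.final_outcome, d.reviewed_by)  # approve loan-officer-7
```

In this sketch a denial never takes effect on the model's word alone: `final_outcome` stays empty until a named reviewer confirms or overrides it, and the `accountable_owner` field answers "who is responsible?" before anything goes wrong.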

The hidden social cost of AI in 2025 wasn’t a single failure — it was a pattern of quiet compromises.

Convenience over consent.

Efficiency over empathy.

Automation over accountability.

2026 will be the year society demands balance.

Not because AI failed —

but because we finally noticed the bill.
